When robots knock, media barons build higher walls, not better businesses.

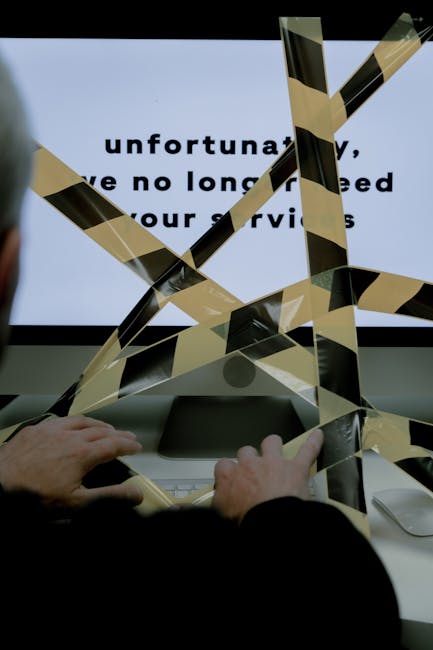

The familiar CAPTCHA gatekeeper has become corporate Britain’s latest canary in the coalmine. This week’s revelation that News Group Newspapers deploys stern warnings about automated access, data mining, and artificial intelligence should surprise precisely no one who follows media’s slow-motion collision with technological reality. What appeared as a mundane user verification screen actually functions as a Rorschach test for our digital decay. We find in its pixels the entire tragicomedy of legacy publishers trying to lock barn doors decades after the data horses bolted.

Let’s acknowledge the technical reality obscured by the legal saber rattling. Any publication hosting open web content while claiming to restrict machine readability engages in fiscal fiction writing. The Internet Archive alone holds over 70 petabytes of crawled media content available through its 'Wayback Machine', a digital library that makes a mockery of hollow 'no scraping' clauses. Meanwhile, third-party data brokers happily sell subscription-free access to this 'protected' content to investment banks and government agencies at premium prices. The illusion of control persists because most publishers lack both the resources and the will to enforce their terms, relying instead on the threat of legal action against entities they can actually identify, typically well-funded tech firms with deeper pockets than sense.

Here emerges our first delicious hypocrisy: publishers demonize AI training while quietly licensing content to the highest bidder through backchannel data deals. Industry insiders whisper of major news brands negotiating private agreements with large language model developers even as their lawyers draft cease and desist letters to startups. This performative outrage follows a well-worn playbook: publicly battle Silicon Valley to appease regulators, privately collect checks when nobody’s looking. The Financial Times reported last month that several undisclosed media conglomerates are lobbying for strict AI legislation while simultaneously running pilot programs with generative AI content tools.

Nor should we ignore the grand theatre of protecting 'original journalism' while simultaneously eroding its foundation. Newsrooms that once employed hundreds of specialist reporters now rely on rehashed press releases and AI generated summaries. A recent City University study found local newspaper editorial teams reduced by 81 percent since 2005, with remaining staff increasingly tasked with search engine optimisation over investigation. The noble defense of creative work rings hollow when publications themselves deploy article spinning software to milk shrinking archives. It’s difficult to take piracy complaints seriously from companies aggressively automating their own content pipelines.

The human toll of this cognitive dissonance unfolds quietly behind the scenes. Journalists find themselves caught between publishers demanding more viral content and managers blocking tools that could ease their workload. Investment analysts privately admit news groups make terrible equity bets because they refuse to adapt business models. Consumers face increasingly poor AI applications as training data becomes constrained by arbitrary corporate borders, yielding chatbots that hallucinate news events while trained on sanitized datasets. Economic stability suffers when vital public records exist behind paywalls incompatible with democracy’s need for accessible information.

This brings us to law’s greatest inadequacy in the digital age. The blunt instrument of copyright cannot solve business model obsolescence. Recent proposed amendments to the Digital Services Act attempting to mandate 'fair compensation' for AI training data represent more desperate flailing than coherent strategy. Legal scholars note such measures would require fundamentally rewriting international copyright frameworks established before most executives owned email accounts. Even if successful, the revenue generated would amount to financial aspirin for an industry hemorrhaging relevance.

Publishers conveniently forget how their own disruptive ancestors operated. The 18th century newspaper barons appropriated content mercilessly, with rival publications routinely pilfering stories days after release. Modern outrage over scraping ignores that content appropriation was the rule rather than the exception until quite recently. The current protectionist stance requires ignoring how media fortunes were built on distribution monopolies now shattered by technology.

Perhaps the most tragicomic element remains publishers fighting yesterday’s war. Conversational AI has already evolved beyond needing fresh article scraping for improvement. Cutting-edge models generate synthetic training data to refine their responses, while alternative data sources enable sophisticated analysis without touching news sites. The world’s largest AI labs now prioritize clean-room datasets over messy real-time web content. News Group’s digital blockade looks increasingly like building a fortress against cavalry charges in the age of stealth bombers.

What truly emerges from this CAPTCHA kerfuffle is an industry-wide failure to comprehend digital economics. Value in the information age flows not from restricting access but from controlling context, not from hoarding data but from orchestrating attention. Spotify understood this in music, as Getty Images is learning in photography. Yet legacy news executives still treat content like Victorian factory owners guarding machinery blueprints. Their solution to technological disruption remains legal threats rather than product innovation, proving Clayton Christensen’s innovator’s dilemma thesis beyond reasonable doubt.

The path forward requires honesty our media barons appear unwilling to embrace. Either news content possesses sufficient unique value that consumers will pay directly for access through subscriptions and micropayments, making scraping irrelevant, or advertising revenue remains critical, necessitating maximum distribution including through search engines and AI applications demanding tolls. Trying to have both an open yet walled garden, a premium yet mass market product, represents strategic confusion at best and delusion at worst.

As the news industry enters its 'Blockbuster Video' phase, watching streaming platforms disrupt its physical rental model while denying transformation was necessary, these legal skirmishes feel increasingly performative. The CAPTCHA warnings serve primarily to reassure shareholders that management is 'doing something' about digital threats. But no volume of threatening legalese can mask the simple truth: a business model based on controlling distribution died with the dial-up modem. Publishers clutching their pearls about AI scraping while their own reporters use ChatGPT to draft stories resemble climate protesters chaining themselves to private jets.

Perhaps it’s time we acknowledged the elephant in the server room. Legacy publishers cling to scraping lawsuits and anti-AI rhetoric because facing real transformation requires admitting their core competencies became liabilities. It’s easier to chase hypothetical licensing revenue than to develop products people willingly pay for. And so the digital walls go up, the lawyers get richer, and the slow demise continues. The robots, one suspects, will outlast us all.

By Edward Clarke