When algorithms learn manners before humans do

Imagine a world where artificial intelligence could evaluate whether a police officer said 'please' often enough during your speeding-ticket encounter. Researchers at the University of Southern California seem determined to make this dystopian sitcom premise a reality through their Everyday Respect Project, which essentially functions as Miss Manners for law enforcement interactions. Only instead of correcting salad-fork misuse, they're training algorithms to spot proper traffic-stop etiquette.

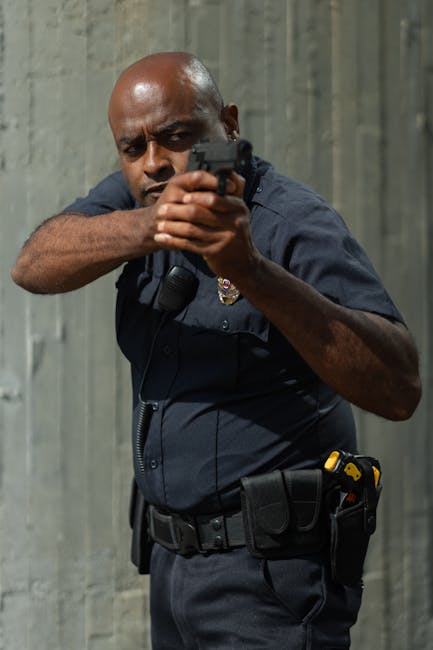

The initiative feeds body-camera footage from thousands of Los Angeles police traffic stops into machine learning systems, hoping to define computationally what makes an interaction feel respectful to civilians. It's like a Turing test for cop civility. In theory, natural language processing could identify verbal patterns that de-escalate confrontations, while computer vision assesses posture and spatial awareness.

One complication emerges as soon as you examine the methodology. Researchers first had to convene focus groups that included both community members and retired officers to co-define what actually constitutes respectful communication. This collaborative anthropology reveals an obvious tension: it is the equivalent of asking foxes and chickens to jointly design the coop's predator-proof security protocols.

Early evaluators within the project analyzed footage using this blended framework. Researchers then used these human assessments to train AI models that will eventually scan 30,000 traffic-stop videos on their own. The technology promises unprecedented scale in policing analytics, revealing behavioral patterns that no single human researcher could catalogue across thousands of encounters.

Beneath the surface-level conflict-resolution premise, however, lies a recursive irony. Officers already perform for body cameras, thanks to the well-documented phenomenon of camera-aware behavior modification. Now we add an AI system that scores officer etiquette. Future cops might subtly angle their lapel cameras toward their smiling faces during stops and adopt wearily patient customer-service voices while internally steaming. One can envision precincts holding mandatory 'How to Score Well on the Courtesy Algorithm' in-service training.

This creates a Schrödinger's cop paradox. Is the politeness genuine or algorithmic theater? The more important question is whether that even matters, so long as the interaction feels safer to the driver. Researchers want to identify objectively measurable markers of respect, pinning down subjective experience through technology in their quest for procedural justice. Perhaps a worthy moonshot, but one prone to oversimplification.

The project framework practically demands this reductionism. Machine learning needs quantifiable inputs. Researchers may tag behaviors like 'explains reason for stop clearly' or 'acknowledges inconvenience' as positive indicators while flagging 'interrupting driver repeatedly' or 'standing unnecessarily close' as negative ones. But human communication has a fractal complexity. Does an officer asking 'How are you today, sir?' signal authentic engagement or disingenuous formality? Can machines detect contempt dripping through a technically polite request for license and registration?

Then comes the bias problem. If training datasets predominantly reflect white, middle-class perspectives on appropriate interactions, relatively insular university focus groups might skew the definition of respectful behavior along the cultural lines they recognize best. Officers policing diaspora communities might use communication styles that scan as 'respectful' to algorithms but misread local cultural norms. Fixating on verbal rapport could also miss crucial environmental factors, like whether officers approach vehicles with weapons drawn during routine stops.

More concerning still is the fundamentally adversarial nature of traffic stops. Even impeccably polite citations still result in fines and legal jeopardy for drivers. No amount of 'good communication' eliminates the tension built into the system. Respectful disappointment remains disappointment. High scores on AI etiquette metrics could coexist perfectly well with racially biased policing patterns.

Financial backers of this technological foray add another layer of intrigue. Tech giants like Google and Microsoft, along with various research foundations, fund the initiative. These companies have their own complicated histories with AI ethics controversies, such as facial recognition systems that misidentify darker-skinned individuals. Private-sector involvement always carries unspoken strings. What does Microsoft gain from funding police communication analytics? An enhanced public image, certainly. Potential law enforcement contracts for algorithmic training tools, possibly. A shield against regulation by positioning itself as a reformer, perhaps.

None of this invalidates the core attempt to improve policing. Footage analysis revealed several predictable patterns. Stops that open with a clear explanation of the violation, rather than immediate demands for compliance, produce better community responses. Officers who identify themselves properly and give citizens space to respond verbally foster smoother encounters. The digital panopticon might promote accountability simply through the expectation of being observed. These findings all trend positive.

But consider the chain reaction of institutionalizing 'respect algorithms'. Departments could weaponize high courtesy scores during use-of-force hearings: "Your honor, my client scored 94% on last month's de-escalation metrics." Civil liberties groups worry that traffic stops offer pretextual justification for investigative fishing expeditions. Racial profiling conducted with perfect etiquette remains unacceptable.

Officers themselves may balk at becoming customer service representatives whose professional worth is reduced to aggregated politeness percentages. Many individuals undoubtedly joined law enforcement to fight violent crime, not to deliver five-star traffic-stop experiences. A heavy focus on palatable encounters could divert needed resources from tackling the more systemic rot within departments.

The ultimate promise involves scaling insights nationwide. Machine learning models that generalize patterns across jurisdictions presume a universal standard of preferred police conduct. But Los Angeles demographics and policing challenges differ dramatically from those of rural Iowa. Cultural attitudes toward authority vary between immigrant communities and military towns. Canned politeness risks going over about as well as a regional manager mandating store greeters in bad neighborhoods.

Researchers seem aware that technological tools alone won't fix policing. Their stated goal is simply to understand what facilitates mutual respect during everyday encounters. They appear admirably committed to transparency, planning to publicly release their tools and methodology. Properly contextualized, these insights might indeed inform better training protocols. Unfortunately, algorithms invariably oversimplify.

Backers suggest AI analysis could also highlight exemplary officer conduct, boosting morale through positive reinforcement. That sunny perspective somewhat ignores how institutions have historically used surveillance tools. More likely, herculean efforts would go toward coercively remediating low scores while barely acknowledging high performers. Such is the nature of bureaucracy.

Technocratic solutions appeal to our era of big data promises. They generate measurable outcomes that satisfy audit requirements. However, reducing human interactions to binary respectful/disrespectful categories inevitably flattens nuance. True systemic reform requires acknowledging that no number of perfectly executed stops compensates for racially disproportionate policing or brutal responses to minor offenses elsewhere in the system.

Perhaps this project's greatest contribution is spotlighting these inherent contradictions. Traffic stops were never really about public safety; they were revenue-generation engines and fishing expeditions. Policing reform was never going to be solved by algorithms measuring verbal tics. Yet here we are, like deep-sea explorers mapping the ocean floor with a ruler.

Do better communication patterns help? Yes. Should officers display basic courtesy and transparency during encounters? Absolutely. But we delude ourselves if we imagine these tweaks address foundational problems. It's like installing ergonomic chairs on the Titanic. Ultimately, the water keeps rising.

By Tracey Curl
